Voices from our academic community: The (partial) replacement of human directors by AI systems


We are pleased to share the external research of Florian Veys, a Master of Laws student at the University of Ghent. This research offers new insights that are useful for companies and their directors. The full study (in Dutch) is available at the following link.

Introduction

The impact of Artificial Intelligence (AI) on corporate boards is becoming increasingly evident. Understanding its implications has become, or should become, an urgent priority for companies and directors alike. This research addressed four key questions: (i) whether the use of AI in corporate boards is currently permitted, (ii) to what extent directors should rely on AI, (iii) which tasks AI can take over from directors, and (iv) how liability should be managed when AI systems make corporate decisions.

Novel Contributions and Originality of the Study

Technique of Incorporation

Our research demonstrates that, at present, it is not legally possible in Belgium to replace human directors with AI systems. Directors may, however, rely on AI in their decision-making, provided they do not delegate their core powers.

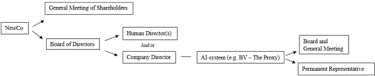

Nonetheless, a theoretical alternative could be considered: incorporating the AI system into a company, such as a limited liability company (BV). This company would serve as a Special Purpose Vehicle, effectively acting as a proxy, and would be appointed as a director of the company to be governed. In this way, the AI system would function de facto as the director of that company. However, this structure requires the appointment of a permanent representative, who must be a natural person. Consequently, human involvement cannot be excluded entirely. This construction, referred to in the research as the ‘technique of incorporation’, is shown in Figure 1.

Fig. 1. Technique of Incorporation

Minimalist versus Maximalist Approach

Our research also takes a position in the debate concerning the scope of tasks that AI systems can perform within corporate boards. There is consensus that such systems can meaningfully undertake administrative tasks. We argue, however, that AI systems can also perform non-administrative tasks, often referred to as ‘judgment work’. This is based on the premise that the corporate interest (vennootschapsbelang/intérêt de la société) can be given concrete shape through proper goal setting and an appropriate balancing of interests.

Our research aligns with the maximalist approach, albeit in a more moderate form, recognizing that certain fields, especially data-rich ones such as mergers and acquisitions (M&A), appear more suitable for AI decision-making. For example, the TietoEVRY Group’s AI system ‘Alicia T’, introduced in 2016, supports management by analyzing sustainability impacts, tracking CO₂ emissions, preparing reports and aiding due diligence processes. These tasks are heavily data-driven, highlighting the potential of AI-assisted decision-making in such fields. Conversely, sectors where decisions rely on creativity or intuition, beyond mere data analysis, may be less suited to AI intervention. Although AI systems can mimic emotional and behavioral cues through techniques such as affective computing, such cues are not central to decision-making in every industry.

Strategic Value for Companies and Boards

The use of an AI system can assist the board in (i) efficiently processing data and (ii) detecting irregularities.

(i) The limited time directors often have for decision-making, combined with the overwhelming amount of information, presents a compelling case for introducing AI into governance so that the board can fulfill its oversight function effectively. AI can process data far more efficiently, and in a shorter time, than human directors. As a result, it can identify patterns and correlations that human directors may miss, leading to better-informed decision-making.

(ii) Additionally, AI can identify potential shortcomings in the company at an early stage by analyzing data and information to detect patterns. Furthermore, given the Caremark test (U.S. case law, Delaware), which requires an information and reporting system to be in place, it is not unreasonable to think that an AI system could well satisfy this requirement.

Corporate Governance Implications

Duty of care

The Belgian Code of Companies and Associations has explicitly incorporated the principle of the duty of care (zorgvuldigheidsplicht/obligation de diligence). In general terms, the duty of care is described as acting in the manner that any normal, prudent and diligent person, or director, would. The research examines in particular how the duty of care is reflected in the board’s oversight function. Many legal scholars regard the oversight function, or monitoring duty, as the core responsibility of a company’s board. The board is required to establish an information and reporting system that ensures it is promptly informed when intervention is needed, including between formal meetings.

The design of this system is a policy decision for the board, which determines its concrete form based on various factors, including the company’s activities. Because the duty of care involves the proper collection and processing of information for informed decision-making, it may soon require directors to rely on AI systems, given rapid technological advances and the growing accessibility of these tools. Consequently, failing to use this technology will become increasingly difficult to justify.

Groupthink and independent directors

AI systems can counteract groupthink by serving as impartial voices, unaffected by social pressure or conflicts of interest. They can assist independent directors in forming unbiased judgments and help promote greater independence in decision-making. Additionally, AI enables independent directors to take a more proactive role, contributing to more effective oversight. Independent directors often sit on multiple boards, where decisions sometimes need to be made quickly. As outsiders to the company, they may not have the time to process all relevant information. AI can help by quickly distilling the crucial information, which can in turn lead to greater engagement.

Short-termism and shareholder-centered approach

Through goal setting and the balancing of interests, AI could partially mitigate short-termism. If the AI system is programmed to incorporate long-term objectives, it can take these goals into account in decision-making, regardless of incentives or pressures to prioritize short-term results. To move beyond a purely shareholder-centered approach, the AI system can be instructed to consider the interests of various stakeholders and to assign different weights to those interests. For example, a company with specific environmental goals might choose to give environmental issues greater weight in its assessment. This shows that AI can be tailored to a company’s specific priorities, allowing it to address stakeholder interests more objectively and effectively.
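To make the idea of weighting stakeholder interests more concrete, the sketch below scores decision options as a weighted sum of their estimated impact on each stakeholder group. The stakeholder groups, weights, impact scores and the names `Option`, `weighted_score` and `rank_options` are hypothetical illustrations chosen for this example; they are not taken from the study or from any system it discusses, and a real board decision would not reduce to a single linear formula.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    # Hypothetical impact estimates per stakeholder group, on a -1..1 scale.
    # Groups missing from the dict are treated as unaffected (impact 0).
    impacts: dict[str, float]


def weighted_score(option: Option, weights: dict[str, float]) -> float:
    """Weighted sum of the option's impact on every stakeholder group in `weights`."""
    return sum(w * option.impacts.get(group, 0.0) for group, w in weights.items())


def rank_options(options: list[Option], weights: dict[str, float]) -> list[Option]:
    """Return the options sorted from highest to lowest weighted score."""
    return sorted(options, key=lambda o: weighted_score(o, weights), reverse=True)


# Illustrative options and weight profiles (all figures are assumptions).
OPTIONS = [
    Option("expand_existing_plant", {"shareholders": 0.8, "environment": -0.9}),
    Option("invest_in_renewables", {"shareholders": 0.3, "environment": 0.8}),
]

# A purely shareholder-centered profile ignores every other group.
SHAREHOLDER_ONLY = {"shareholders": 1.0, "employees": 0.0, "creditors": 0.0, "environment": 0.0}

# A company with environmental goals gives the environment greater weight.
GREEN_WEIGHTS = {"shareholders": 0.3, "employees": 0.1, "creditors": 0.1, "environment": 0.5}

if __name__ == "__main__":
    for label, weights in [("shareholder-only", SHAREHOLDER_ONLY), ("green", GREEN_WEIGHTS)]:
        best = rank_options(OPTIONS, weights)[0]
        print(f"{label}: {best.name}")
```

With the shareholder-only profile the plant expansion ranks first (score 0.8 against 0.3); with the environmentally weighted profile the renewables investment ranks first (0.49 against -0.21). The same options thus lead to different outcomes purely because the company has set different priorities, which is the flexibility the paragraph above describes.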

Liability arising from AI decision-making

The study also explores and assesses different approaches to managing liability when harm arises from AI decision-making, such as:

- The AI system acting as a de facto director (feitelijke bestuurder/dirigeant de fait)

- Product liability and AI systems

- Delegation as defence (delegatie/délégation)

- Exculpation as defence (disculpatie/disculpation)

In doing so, it proposes several recommendations for lawmakers, including:

- Mandatory insurance coverage

- System of permanent representation

Fig. 2. Evaluation of Liability Regimes